Understanding Dataset-level Labeling Issues

This 5-minute quickstart tutorial shows how cleanlab.dataset.health_summary() helps you automatically:

Score and rank the overall label quality of each class, useful for deciding whether to remove or keep certain classes.

Identify overlapping classes that you can merge to make the learning task less ambiguous. Alternatively, use this information to refine your annotator instructions (e.g. by defining the difference between two classes more precisely).

Generate an overall dataset and label quality health score to track improvements in your labels over time as you clean your datasets.

This tutorial does not study issues in individual data points, but rather global issues across the dataset. Much of the functionality demonstrated here can also be accessed via Datalab.get_info() when using Datalab to detect label issues.

Quickstart

Already have (out-of-sample) pred_probs from a model trained on your dataset? Run the code below to evaluate the overall health of your dataset and its labels.

from cleanlab.dataset import health_summary

health_summary(labels, pred_probs)
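If you do not have out-of-sample pred_probs yet, the idea can be sketched as follows: split the data into folds, fit a model on all folds but one, and predict probabilities only for the held-out fold. This is a minimal illustration and not part of cleanlab. The function name `out_of_sample_pred_probs`, the toy nearest-centroid classifier, and the synthetic data are all assumptions made for the example. In practice you would typically use your own model, for instance via scikit-learn's `cross_val_predict(..., method="predict_proba")`.

```python
import numpy as np

def out_of_sample_pred_probs(X, labels, num_classes, n_folds=5, seed=0):
    """Toy K-fold cross-validation returning held-out predicted probabilities.

    A nearest-centroid classifier stands in for a real model (assumption).
    """
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(labels)), n_folds)
    pred_probs = np.zeros((len(labels), num_classes))
    for k, held_out in enumerate(folds):
        train = np.concatenate([f for j, f in enumerate(folds) if j != k])
        # Fit on the training folds only: one centroid per class.
        centroids = np.stack([
            X[train][labels[train] == c].mean(axis=0) for c in range(num_classes)
        ])
        # Score held-out points by negative squared distance, then apply softmax.
        dists = ((X[held_out][:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        logits = -dists
        logits -= logits.max(axis=1, keepdims=True)
        probs = np.exp(logits)
        pred_probs[held_out] = probs / probs.sum(axis=1, keepdims=True)
    return pred_probs

# Synthetic two-class data, purely for illustration.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, size=(50, 2)), rng.normal(4.0, 1.0, size=(50, 2))])
labels = np.array([0] * 50 + [1] * 50)
pred_probs = out_of_sample_pred_probs(X, labels, num_classes=2)
print(pred_probs.shape)  # (100, 2)
```

Every row of the resulting pred_probs comes from a model that never saw that example during fitting, which is what makes the probabilities out-of-sample. The resulting labels and pred_probs have the form expected by health_summary(labels, pred_probs).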

Install dependencies and import them

You can use pip to install all packages required for this tutorial as follows:

!pip install requests

!pip install cleanlab

# Make sure to install the version corresponding to this tutorial

# E.g. if viewing master branch documentation:

# !pip install git+https://github.com/cleanlab/cleanlab.git

import requests

import io

import cleanlab

import numpy as np

Fetch the data (can skip these details)

# Note: This pulldown content is for docs.cleanlab.ai, if running on local Jupyter or Colab, please ignore it.

mnist_test_set = ["0", "1", "2", "3", "4", "5", "6", "7", "8", "9"]

imagenet_val_set = ["tench", "goldfish", "great white shark", "tiger shark", "hammerhead shark", "electric ray", "stingray", "cock", "hen", "ostrich", "brambling", "goldfinch", "house finch", "junco", "indigo bunting", "American robin", "bulbul", "jay", "magpie", "chickadee", "American dipper", "kite", "bald eagle", "vulture", "great grey owl", "fire salamander", "smooth newt", "newt", "spotted salamander", "axolotl", "American bullfrog", "tree frog", "tailed frog", "loggerhead sea turtle", "leatherback sea turtle", "mud turtle", "terrapin", "box turtle", "banded gecko", "green iguana", "Carolina anole", "desert grassland whiptail lizard", "agama", "frilled-necked lizard", "alligator lizard", "Gila monster", "European green lizard", "chameleon", "Komodo dragon", "Nile crocodile", "American alligator", "triceratops", "worm snake", "ring-necked snake", "eastern hog-nosed snake", "smooth green snake", "kingsnake", "garter snake", "water snake", "vine snake", "night snake", "boa constrictor", "African rock python", "Indian cobra", "green mamba", "sea snake", "Saharan horned viper", "eastern diamondback rattlesnake", "sidewinder", "trilobite", "harvestman", "scorpion", "yellow garden spider", "barn spider", "European garden spider", "southern black widow", "tarantula", "wolf spider", "tick", "centipede", "black grouse", "ptarmigan", "ruffed grouse", "prairie grouse", "peacock", "quail", "partridge", "grey parrot", "macaw", "sulphur-crested cockatoo", "lorikeet", "coucal", "bee eater", "hornbill", "hummingbird", "jacamar", "toucan", "duck", "red-breasted merganser", "goose", "black swan", "tusker", "echidna", "platypus", "wallaby", "koala", "wombat", "jellyfish", "sea anemone", "brain coral", "flatworm", "nematode", "conch", "snail", "slug", "sea slug", "chiton", "chambered nautilus", "Dungeness crab", "rock crab", "fiddler crab", "red king crab", "American lobster", "spiny lobster", "crayfish", "hermit crab", "isopod", "white stork", "black stork", "spoonbill", 
"flamingo", "little blue heron", "great egret", "bittern", "crane (bird)", "limpkin", "common gallinule", "American coot", "bustard", "ruddy turnstone", "dunlin", "common redshank", "dowitcher", "oystercatcher", "pelican", "king penguin", "albatross", "grey whale", "killer whale", "dugong", "sea lion", "Chihuahua", "Japanese Chin", "Maltese", "Pekingese", "Shih Tzu", "King Charles Spaniel", "Papillon", "toy terrier", "Rhodesian Ridgeback", "Afghan Hound", "Basset Hound", "Beagle", "Bloodhound", "Bluetick Coonhound", "Black and Tan Coonhound", "Treeing Walker Coonhound", "English foxhound", "Redbone Coonhound", "borzoi", "Irish Wolfhound", "Italian Greyhound", "Whippet", "Ibizan Hound", "Norwegian Elkhound", "Otterhound", "Saluki", "Scottish Deerhound", "Weimaraner", "Staffordshire Bull Terrier", "American Staffordshire Terrier", "Bedlington Terrier", "Border Terrier", "Kerry Blue Terrier", "Irish Terrier", "Norfolk Terrier", "Norwich Terrier", "Yorkshire Terrier", "Wire Fox Terrier", "Lakeland Terrier", "Sealyham Terrier", "Airedale Terrier", "Cairn Terrier", "Australian Terrier", "Dandie Dinmont Terrier", "Boston Terrier", "Miniature Schnauzer", "Giant Schnauzer", "Standard Schnauzer", "Scottish Terrier", "Tibetan Terrier", "Australian Silky Terrier", "Soft-coated Wheaten Terrier", "West Highland White Terrier", "Lhasa Apso", "Flat-Coated Retriever", "Curly-coated Retriever", "Golden Retriever", "Labrador Retriever", "Chesapeake Bay Retriever", "German Shorthaired Pointer", "Vizsla", "English Setter", "Irish Setter", "Gordon Setter", "Brittany", "Clumber Spaniel", "English Springer Spaniel", "Welsh Springer Spaniel", "Cocker Spaniels", "Sussex Spaniel", "Irish Water Spaniel", "Kuvasz", "Schipperke", "Groenendael", "Malinois", "Briard", "Australian Kelpie", "Komondor", "Old English Sheepdog", "Shetland Sheepdog", "collie", "Border Collie", "Bouvier des Flandres", "Rottweiler", "German Shepherd Dog", "Dobermann", "Miniature Pinscher", "Greater Swiss Mountain Dog", 
"Bernese Mountain Dog", "Appenzeller Sennenhund", "Entlebucher Sennenhund", "Boxer", "Bullmastiff", "Tibetan Mastiff", "French Bulldog", "Great Dane", "St. Bernard", "husky", "Alaskan Malamute", "Siberian Husky", "Dalmatian", "Affenpinscher", "Basenji", "pug", "Leonberger", "Newfoundland", "Pyrenean Mountain Dog", "Samoyed", "Pomeranian", "Chow Chow", "Keeshond", "Griffon Bruxellois", "Pembroke Welsh Corgi", "Cardigan Welsh Corgi", "Toy Poodle", "Miniature Poodle", "Standard Poodle", "Mexican hairless dog", "grey wolf", "Alaskan tundra wolf", "red wolf", "coyote", "dingo", "dhole", "African wild dog", "hyena", "red fox", "kit fox", "Arctic fox", "grey fox", "tabby cat", "tiger cat", "Persian cat", "Siamese cat", "Egyptian Mau", "cougar", "lynx", "leopard", "snow leopard", "jaguar", "lion", "tiger", "cheetah", "brown bear", "American black bear", "polar bear", "sloth bear", "mongoose", "meerkat", "tiger beetle", "ladybug", "ground beetle", "longhorn beetle", "leaf beetle", "dung beetle", "rhinoceros beetle", "weevil", "fly", "bee", "ant", "grasshopper", "cricket", "stick insect", "cockroach", "mantis", "cicada", "leafhopper", "lacewing", "dragonfly", "damselfly", "red admiral", "ringlet", "monarch butterfly", "small white", "sulphur butterfly", "gossamer-winged butterfly", "starfish", "sea urchin", "sea cucumber", "cottontail rabbit", "hare", "Angora rabbit", "hamster", "porcupine", "fox squirrel", "marmot", "beaver", "guinea pig", "common sorrel", "zebra", "pig", "wild boar", "warthog", "hippopotamus", "ox", "water buffalo", "bison", "ram", "bighorn sheep", "Alpine ibex", "hartebeest", "impala", "gazelle", "dromedary", "llama", "weasel", "mink", "European polecat", "black-footed ferret", "otter", "skunk", "badger", "armadillo", "three-toed sloth", "orangutan", "gorilla", "chimpanzee", "gibbon", "siamang", "guenon", "patas monkey", "baboon", "macaque", "langur", "black-and-white colobus", "proboscis monkey", "marmoset", "white-headed capuchin", "howler monkey", 
"titi", "Geoffroy's spider monkey", "common squirrel monkey", "ring-tailed lemur", "indri", "Asian elephant", "African bush elephant", "red panda", "giant panda", "snoek", "eel", "coho salmon", "rock beauty", "clownfish", "sturgeon", "garfish", "lionfish", "pufferfish", "abacus", "abaya", "academic gown", "accordion", "acoustic guitar", "aircraft carrier", "airliner", "airship", "altar", "ambulance", "amphibious vehicle", "analog clock", "apiary", "apron", "waste container", "assault rifle", "backpack", "bakery", "balance beam", "balloon", "ballpoint pen", "Band-Aid", "banjo", "baluster", "barbell", "barber chair", "barbershop", "barn", "barometer", "barrel", "wheelbarrow", "baseball", "basketball", "bassinet", "bassoon", "swimming cap", "bath towel", "bathtub", "station wagon", "lighthouse", "beaker", "military cap", "beer bottle", "beer glass", "bell-cot", "bib", "tandem bicycle", "bikini", "ring binder", "binoculars", "birdhouse", "boathouse", "bobsleigh", "bolo tie", "poke bonnet", "bookcase", "bookstore", "bottle cap", "bow", "bow tie", "brass", "bra", "breakwater", "breastplate", "broom", "bucket", "buckle", "bulletproof vest", "high-speed train", "butcher shop", "taxicab", "cauldron", "candle", "cannon", "canoe", "can opener", "cardigan", "car mirror", "carousel", "tool kit", "carton", "car wheel", "automated teller machine", "cassette", "cassette player", "castle", "catamaran", "CD player", "cello", "mobile phone", "chain", "chain-link fence", "chain mail", "chainsaw", "chest", "chiffonier", "chime", "china cabinet", "Christmas stocking", "church", "movie theater", "cleaver", "cliff dwelling", "cloak", "clogs", "cocktail shaker", "coffee mug", "coffeemaker", "coil", "combination lock", "computer keyboard", "confectionery store", "container ship", "convertible", "corkscrew", "cornet", "cowboy boot", "cowboy hat", "cradle", "crane (machine)", "crash helmet", "crate", "infant bed", "Crock Pot", "croquet ball", "crutch", "cuirass", "dam", "desk", "desktop computer", 
"rotary dial telephone", "diaper", "digital clock", "digital watch", "dining table", "dishcloth", "dishwasher", "disc brake", "dock", "dog sled", "dome", "doormat", "drilling rig", "drum", "drumstick", "dumbbell", "Dutch oven", "electric fan", "electric guitar", "electric locomotive", "entertainment center", "envelope", "espresso machine", "face powder", "feather boa", "filing cabinet", "fireboat", "fire engine", "fire screen sheet", "flagpole", "flute", "folding chair", "football helmet", "forklift", "fountain", "fountain pen", "four-poster bed", "freight car", "French horn", "frying pan", "fur coat", "garbage truck", "gas mask", "gas pump", "goblet", "go-kart", "golf ball", "golf cart", "gondola", "gong", "gown", "grand piano", "greenhouse", "grille", "grocery store", "guillotine", "barrette", "hair spray", "half-track", "hammer", "hamper", "hair dryer", "hand-held computer", "handkerchief", "hard disk drive", "harmonica", "harp", "harvester", "hatchet", "holster", "home theater", "honeycomb", "hook", "hoop skirt", "horizontal bar", "horse-drawn vehicle", "hourglass", "iPod", "clothes iron", "jack-o'-lantern", "jeans", "jeep", "T-shirt", "jigsaw puzzle", "pulled rickshaw", "joystick", "kimono", "knee pad", "knot", "lab coat", "ladle", "lampshade", "laptop computer", "lawn mower", "lens cap", "paper knife", "library", "lifeboat", "lighter", "limousine", "ocean liner", "lipstick", "slip-on shoe", "lotion", "speaker", "loupe", "sawmill", "magnetic compass", "mail bag", "mailbox", "tights", "tank suit", "manhole cover", "maraca", "marimba", "mask", "match", "maypole", "maze", "measuring cup", "medicine chest", "megalith", "microphone", "microwave oven", "military uniform", "milk can", "minibus", "miniskirt", "minivan", "missile", "mitten", "mixing bowl", "mobile home", "Model T", "modem", "monastery", "monitor", "moped", "mortar", "square academic cap", "mosque", "mosquito net", "scooter", "mountain bike", "tent", "computer mouse", "mousetrap", "moving van", 
"muzzle", "nail", "neck brace", "necklace", "nipple", "notebook computer", "obelisk", "oboe", "ocarina", "odometer", "oil filter", "organ", "oscilloscope", "overskirt", "bullock cart", "oxygen mask", "packet", "paddle", "paddle wheel", "padlock", "paintbrush", "pajamas", "palace", "pan flute", "paper towel", "parachute", "parallel bars", "park bench", "parking meter", "passenger car", "patio", "payphone", "pedestal", "pencil case", "pencil sharpener", "perfume", "Petri dish", "photocopier", "plectrum", "Pickelhaube", "picket fence", "pickup truck", "pier", "piggy bank", "pill bottle", "pillow", "ping-pong ball", "pinwheel", "pirate ship", "pitcher", "hand plane", "planetarium", "plastic bag", "plate rack", "plow", "plunger", "Polaroid camera", "pole", "police van", "poncho", "billiard table", "soda bottle", "pot", "potter's wheel", "power drill", "prayer rug", "printer", "prison", "projectile", "projector", "hockey puck", "punching bag", "purse", "quill", "quilt", "race car", "racket", "radiator", "radio", "radio telescope", "rain barrel", "recreational vehicle", "reel", "reflex camera", "refrigerator", "remote control", "restaurant", "revolver", "rifle", "rocking chair", "rotisserie", "eraser", "rugby ball", "ruler", "running shoe", "safe", "safety pin", "salt shaker", "sandal", "sarong", "saxophone", "scabbard", "weighing scale", "school bus", "schooner", "scoreboard", "CRT screen", "screw", "screwdriver", "seat belt", "sewing machine", "shield", "shoe store", "shoji", "shopping basket", "shopping cart", "shovel", "shower cap", "shower curtain", "ski", "ski mask", "sleeping bag", "slide rule", "sliding door", "slot machine", "snorkel", "snowmobile", "snowplow", "soap dispenser", "soccer ball", "sock", "solar thermal collector", "sombrero", "soup bowl", "space bar", "space heater", "space shuttle", "spatula", "motorboat", "spider web", "spindle", "sports car", "spotlight", "stage", "steam locomotive", "through arch bridge", "steel drum", "stethoscope", 
"scarf", "stone wall", "stopwatch", "stove", "strainer", "tram", "stretcher", "couch", "stupa", "submarine", "suit", "sundial", "sunglass", "sunglasses", "sunscreen", "suspension bridge", "mop", "sweatshirt", "swimsuit", "swing", "switch", "syringe", "table lamp", "tank", "tape player", "teapot", "teddy bear", "television", "tennis ball", "thatched roof", "front curtain", "thimble", "threshing machine", "throne", "tile roof", "toaster", "tobacco shop", "toilet seat", "torch", "totem pole", "tow truck", "toy store", "tractor", "semi-trailer truck", "tray", "trench coat", "tricycle", "trimaran", "tripod", "triumphal arch", "trolleybus", "trombone", "tub", "turnstile", "typewriter keyboard", "umbrella", "unicycle", "upright piano", "vacuum cleaner", "vase", "vault", "velvet", "vending machine", "vestment", "viaduct", "violin", "volleyball", "waffle iron", "wall clock", "wallet", "wardrobe", "military aircraft", "sink", "washing machine", "water bottle", "water jug", "water tower", "whiskey jug", "whistle", "wig", "window screen", "window shade", "Windsor tie", "wine bottle", "wing", "wok", "wooden spoon", "wool", "split-rail fence", "shipwreck", "yawl", "yurt", "website", "comic book", "crossword", "traffic sign", "traffic light", "dust jacket", "menu", "plate", "guacamole", "consomme", "hot pot", "trifle", "ice cream", "ice pop", "baguette", "bagel", "pretzel", "cheeseburger", "hot dog", "mashed potato", "cabbage", "broccoli", "cauliflower", "zucchini", "spaghetti squash", "acorn squash", "butternut squash", "cucumber", "artichoke", "bell pepper", "cardoon", "mushroom", "Granny Smith", "strawberry", "orange", "lemon", "fig", "pineapple", "banana", "jackfruit", "custard apple", "pomegranate", "hay", "carbonara", "chocolate syrup", "dough", "meatloaf", "pizza", "pot pie", "burrito", "red wine", "espresso", "cup", "eggnog", "alp", "bubble", "cliff", "coral reef", "geyser", "lakeshore", "promontory", "shoal", "seashore", "valley", "volcano", "baseball player", 
"bridegroom", "scuba diver", "rapeseed", "daisy", "yellow lady's slipper", "corn", "acorn", "rose hip", "horse chestnut seed", "coral fungus", "agaric", "gyromitra", "stinkhorn mushroom", "earth star", "hen-of-the-woods", "bolete", "ear", "toilet paper"]

cifar10_test_set = ["airplane", "automobile", "bird", "cat", "deer", "dog", "frog", "horse", "ship", "truck"]

cifar100_test_set = ['apple', 'aquarium_fish', 'baby', 'bear', 'beaver', 'bed', 'bee', 'beetle', 'bicycle', 'bottle', 'bowl', 'boy', 'bridge', 'bus', 'butterfly', 'camel', 'can', 'castle', 'caterpillar', 'cattle', 'chair', 'chimpanzee', 'clock', 'cloud', 'cockroach', 'couch', 'crab', 'crocodile', 'cup', 'dinosaur', 'dolphin', 'elephant', 'flatfish', 'forest', 'fox', 'girl', 'hamster', 'house', 'kangaroo', 'keyboard', 'lamp', 'lawn_mower', 'leopard', 'lion', 'lizard', 'lobster', 'man', 'maple_tree', 'motorcycle', 'mountain', 'mouse', 'mushroom', 'oak_tree', 'orange', 'orchid', 'otter', 'palm_tree', 'pear', 'pickup_truck', 'pine_tree', 'plain', 'plate', 'poppy', 'porcupine', 'possum', 'rabbit', 'raccoon', 'ray', 'road', 'rocket', 'rose', 'sea', 'seal', 'shark', 'shrew', 'skunk', 'skyscraper', 'snail', 'snake', 'spider', 'squirrel', 'streetcar', 'sunflower', 'sweet_pepper', 'table', 'tank', 'telephone', 'television', 'tiger', 'tractor', 'train', 'trout', 'tulip', 'turtle', 'wardrobe', 'whale', 'willow_tree', 'wolf', 'woman', 'worm']

caltech256 = ["ak47", "american-flag", "backpack", "baseball-bat", "baseball-glove", "basketball-hoop", "bat", "bathtub", "bear", "beer-mug", "billiards", "binoculars", "birdbath", "blimp", "bonsai", "boom-box", "bowling-ball", "bowling-pin", "boxing-glove", "brain", "breadmaker", "buddha", "bulldozer", "butterfly", "cactus", "cake", "calculator", "camel", "cannon", "canoe", "car-tire", "cartman", "cd", "centipede", "cereal-box", "chandelier", "chess-board", "chimp", "chopsticks", "cockroach", "coffee-mug", "coffin", "coin", "comet", "computer-keyboard", "computer-monitor", "computer-mouse", "conch", "cormorant", "covered-wagon", "cowboy-hat", "crab", "desk-globe", "diamond-ring", "dice", "dog", "dolphin", "doorknob", "drinking-straw", "duck", "dumb-bell", "eiffel-tower", "electric-guitar", "elephant", "elk", "ewer", "eyeglasses", "fern", "fighter-jet", "fire-extinguisher", "fire-hydrant", "fire-truck", "fireworks", "flashlight", "floppy-disk", "football-helmet", "french-horn", "fried-egg", "frisbee", "frog", "frying-pan", "galaxy", "gas-pump", "giraffe", "goat", "golden-gate-bridge", "goldfish", "golf-ball", "goose", "gorilla", "grand-piano", "grapes", "grasshopper", "guitar-pick", "hamburger", "hammock", "harmonica", "harp", "harpsichord", "hawksbill", "head-phones", "helicopter", "hibiscus", "homer-simpson", "horse", "horseshoe-crab", "hot-air-balloon", "hot-dog", "hot-tub", "hourglass", "house-fly", "human-skeleton", "hummingbird", "ibis", "ice-cream-cone", "iguana", "ipod", "iris", "jesus-christ", "joy-stick", "kangaroo", "kayak", "ketch", "killer-whale", "knife", "ladder", "laptop", "lathe", "leopards", "license-plate", "lightbulb", "light-house", "lightning", "llama", "mailbox", "mandolin", "mars", "mattress", "megaphone", "menorah", "microscope", "microwave", "minaret", "minotaur", "motorbikes", "mountain-bike", "mushroom", "mussels", "necktie", "octopus", "ostrich", "owl", "palm-pilot", "palm-tree", "paperclip", "paper-shredder", "pci-card", "penguin", 
"people", "pez-dispenser", "photocopier", "picnic-table", "playing-card", "porcupine", "pram", "praying-mantis", "pyramid", "raccoon", "radio-telescope", "rainbow", "refrigerator", "revolver", "rifle", "rotary-phone", "roulette-wheel", "saddle", "saturn", "school-bus", "scorpion", "screwdriver", "segway", "self-propelled-lawn-mower", "sextant", "sheet-music", "skateboard", "skunk", "skyscraper", "smokestack", "snail", "snake", "sneaker", "snowmobile", "soccer-ball", "socks", "soda-can", "spaghetti", "speed-boat", "spider", "spoon", "stained-glass", "starfish", "steering-wheel", "stirrups", "sunflower", "superman", "sushi", "swan", "swiss-army-knife", "sword", "syringe", "tambourine", "teapot", "teddy-bear", "teepee", "telephone-box", "tennis-ball", "tennis-court", "tennis-racket", "theodolite", "toaster", "tomato", "tombstone", "top-hat", "touring-bike", "tower-pisa", "traffic-light", "treadmill", "triceratops", "tricycle", "trilobite", "tripod", "t-shirt", "tuning-fork", "tweezer", "umbrella", "unicorn", "vcr", "video-projector", "washing-machine", "watch", "waterfall", "watermelon", "welding-mask", "wheelbarrow", "windmill", "wine-bottle", "xylophone", "yarmulke", "yo-yo", "zebra", "airplanes", "car-side", "faces-easy", "greyhound", "tennis-shoes", "toad"]

twenty_news_test_set = ['alt.atheism', 'comp.graphics', 'comp.os.ms-windows.misc', 'comp.sys.ibm.pc.hardware', 'comp.sys.mac.hardware', 'comp.windows.x', 'misc.forsale', 'rec.autos', 'rec.motorcycles', 'rec.sport.baseball', 'rec.sport.hockey', 'sci.crypt', 'sci.electronics', 'sci.med', 'sci.space', 'soc.religion.christian', 'talk.politics.guns', 'talk.politics.mideast', 'talk.politics.misc', 'talk.religion.misc']

amazon = ['Negative', 'Neutral', 'Positive']

imdb_test_set = ["Negative", "Positive"]

ALL_CLASSES = {
    'imagenet_val_set': imagenet_val_set,
    'caltech256': caltech256,
    'mnist_test_set': mnist_test_set,
    'cifar10_test_set': cifar10_test_set,
    'cifar100_test_set': cifar100_test_set,
    'imdb_test_set': imdb_test_set,
    '20news_test_set': twenty_news_test_set,
    'amazon': amazon,
}

def _load_classes_predprobs_labels(dataset_name):
    """Helper function to load data from the labelerrors.com datasets."""
    base = 'https://github.com/cleanlab/label-errors/raw/'
    url_base = base + '5392f6c71473055060be3044becdde1cbc18284d'
    url_labels = url_base + '/original_test_labels/{}_original_labels.npy'
    url_probs = url_base + '/cross_validated_predicted_probabilities/{}_pyx.npy'
    NUM_PARTS = {'amazon': 3, 'imagenet_val_set': 4}  # pred_probs files broken up into parts for larger datasets

    # Download the given (possibly noisy) labels.
    response = requests.get(url_labels.format(dataset_name))
    labels = np.load(io.BytesIO(response.content), allow_pickle=True)

    if dataset_name in NUM_PARTS:
        # Larger datasets: download each part and stack them row-wise.
        pred_probs_parts = []
        for i in range(1, NUM_PARTS[dataset_name] + 1):
            url = url_probs.format(dataset_name).replace(
                '.npy',
                f'.part{i}_of_{NUM_PARTS[dataset_name]}.npy',
            )
            response = requests.get(url)
            pred_probs_parts.append(
                np.load(io.BytesIO(response.content), allow_pickle=True))
        pred_probs = np.vstack(pred_probs_parts)
    else:
        response = requests.get(url_probs.format(dataset_name))
        pred_probs = np.load(io.BytesIO(response.content), allow_pickle=True)

    print(f"\nLoaded the '{dataset_name}' dataset with predicted "
          f"probabilities of shape {pred_probs.shape}\n")
    return pred_probs, labels, ALL_CLASSES[dataset_name]

Start of tutorial: Evaluate the health of 8 popular datasets

This tutorial shows the output of running cleanlab.dataset.health_summary() on 8 popular datasets below:

5 image datasets: ImageNet, Caltech256, MNIST, CIFAR-10, CIFAR-100

3 text datasets: IMDB Reviews, 20 News Groups, Amazon Reviews

cleanlab.dataset.health_summary() works with several kinds of inputs (see docstring). In this tutorial, we input:

out-of-sample predicted probabilities (e.g. computed via cross-validation)

labels (can contain label errors and various issues)
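Before calling health_summary, it can help to confirm that the two inputs line up. The sketch below is a hypothetical helper (`check_inputs` is not a cleanlab function) and assumes the common convention of an N×K matrix of predicted probabilities plus N integer labels in the range 0..K-1, which matches the shapes printed by the loader in this tutorial.

```python
import numpy as np

def check_inputs(labels, pred_probs):
    """Hypothetical helper: sanity-check labels and pred_probs before use."""
    labels = np.asarray(labels)
    pred_probs = np.asarray(pred_probs)
    n_examples, n_classes = pred_probs.shape
    assert labels.shape == (n_examples,), "need one label per row of pred_probs"
    assert np.issubdtype(labels.dtype, np.integer), "labels should be integer class indices"
    assert labels.min() >= 0 and labels.max() < n_classes, "label outside 0..K-1"
    assert np.allclose(pred_probs.sum(axis=1), 1.0, atol=1e-4), "rows should sum to 1"
    return n_examples, n_classes

# Tiny made-up example with 4 examples and 3 classes.
labels = np.array([0, 2, 1, 1])
pred_probs = np.array([
    [0.8, 0.1, 0.1],
    [0.2, 0.2, 0.6],
    [0.1, 0.7, 0.2],
    [0.6, 0.3, 0.1],  # the model disagrees with the given label 1 here
])
print(check_inputs(labels, pred_probs))  # (4, 3)
```

Rows like the last one, where the model confidently prefers a class other than the given label, are the kind of evidence that dataset-level summaries aggregate.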

For the 8 datasets, we’ve precomputed and loaded these for you. See labelerrors.com for more info about the label issues in these datasets.

Want more interpretability?

Pass a list of class names, ordered by class index, as the class_names argument of cleanlab.dataset.health_summary().

DATASETS = ['caltech256', 'mnist_test_set', 'cifar10_test_set', 'cifar100_test_set', '20news_test_set']

for dataset_name in DATASETS:
    print("\n🎯 " + dataset_name.capitalize() + " 🎯\n")

    # load class names, given labels, and predicted probabilities from already-trained model
    pred_probs, labels, class_names = _load_classes_predprobs_labels(dataset_name)

    # run 1 line of code to evaluate the health of your dataset
    _ = cleanlab.dataset.health_summary(labels, pred_probs, class_names=class_names)

🎯 Caltech256 🎯

Loaded the 'caltech256' dataset with predicted probabilities of shape (29780, 256)

-------------------------------------------------------------

| Generating a Cleanlab Dataset Health Summary |

| for your dataset with 29,780 examples and 256 classes. |

| Note, Cleanlab is not a medical doctor... yet. |

-------------------------------------------------------------

Overall Class Quality and Noise across your dataset (below)

------------------------------------------------------------

| | Class Name | Class Index | Label Issues | Inverse Label Issues | Label Noise | Inverse Label Noise | Label Quality Score |

|---|---|---|---|---|---|---|---|

| 0 | tennis-shoes | 254 | 37 | 33 | 0.359223 | 0.333333 | 0.640777 |

| 1 | skateboard | 184 | 37 | 23 | 0.359223 | 0.258427 | 0.640777 |

| 2 | chopsticks | 38 | 29 | 20 | 0.341176 | 0.263158 | 0.658824 |

| 3 | drinking-straw | 58 | 28 | 18 | 0.337349 | 0.246575 | 0.662651 |

| 4 | yo-yo | 248 | 33 | 37 | 0.330000 | 0.355769 | 0.670000 |

| ... | ... | ... | ... | ... | ... | ... | ... |

| 251 | raccoon | 167 | 0 | 0 | 0.000000 | 0.000000 | 1.000000 |

| 252 | hummingbird | 112 | 0 | 0 | 0.000000 | 0.000000 | 1.000000 |

| 253 | hourglass | 109 | 0 | 2 | 0.000000 | 0.022989 | 1.000000 |

| 254 | starfish | 200 | 0 | 0 | 0.000000 | 0.000000 | 1.000000 |

| 255 | saturn | 176 | 0 | 5 | 0.000000 | 0.049505 | 1.000000 |

256 rows × 7 columns

Class Overlap. In some cases, you may want to merge classes in the top rows (below)

-----------------------------------------------------------------------------------

| | Class Name A | Class Name B | Class Index A | Class Index B | Num Overlapping Examples | Joint Probability |

|---|---|---|---|---|---|---|

| 0 | sneaker | tennis-shoes | 190 | 254 | 66 | 0.002216 |

| 1 | frisbee | yo-yo | 78 | 248 | 29 | 0.000974 |

| 2 | duck | goose | 59 | 88 | 26 | 0.000873 |

| 3 | beer-mug | coffee-mug | 9 | 40 | 22 | 0.000739 |

| 4 | frog | toad | 79 | 255 | 22 | 0.000739 |

| ... | ... | ... | ... | ... | ... | ... |

| 32635 | cormorant | covered-wagon | 48 | 49 | 0 | 0.000000 |

| 32636 | conch | toad | 47 | 255 | 0 | 0.000000 |

| 32637 | conch | tennis-shoes | 47 | 254 | 0 | 0.000000 |

| 32638 | conch | greyhound | 47 | 253 | 0 | 0.000000 |

| 32639 | tennis-shoes | toad | 254 | 255 | 0 | 0.000000 |

32640 rows × 6 columns

* Overall, about 7% (2,051 of the 29,780) labels in your dataset have potential issues.

** The overall label health score for this dataset is: 0.93.

Generated with <3 from Cleanlab.
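The numbers in the Caltech256 class-overlap table above can be cross-checked with a little arithmetic. The sketch below uses only values printed in that output. The reading that each unordered pair of classes gets one row, and that "Joint Probability" equals the overlap count divided by the dataset size, is inferred from those printed numbers and is not a statement about cleanlab's internals.

```python
num_examples = 29780  # Caltech256 examples, from the summary header
num_classes = 256

# One row per unordered pair of distinct classes.
num_pairs = num_classes * (num_classes - 1) // 2
print(num_pairs)  # 32640, matching "32640 rows x 6 columns"

# (class A, class B, "Num Overlapping Examples", "Joint Probability") from the top rows.
top_rows = [
    ("sneaker", "tennis-shoes", 66, 0.002216),
    ("frisbee", "yo-yo", 29, 0.000974),
    ("duck", "goose", 26, 0.000873),
    ("beer-mug", "coffee-mug", 22, 0.000739),
]
for name_a, name_b, overlap, reported in top_rows:
    joint = overlap / num_examples
    print(f"{name_a} / {name_b}: {joint:.6f} (table: {reported:.6f})")
```

Read this way, the joint probability is the share of the whole dataset caught up in confusion between the two classes, which is why even the most-overlapping pair has a small value in a 256-class dataset.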

🎯 Mnist_test_set 🎯

Loaded the 'mnist_test_set' dataset with predicted probabilities of shape (10000, 10)

------------------------------------------------------------

| Generating a Cleanlab Dataset Health Summary |

| for your dataset with 10,000 examples and 10 classes. |

| Note, Cleanlab is not a medical doctor... yet. |

------------------------------------------------------------

Overall Class Quality and Noise across your dataset (below)

------------------------------------------------------------

| | Class Name | Class Index | Label Issues | Inverse Label Issues | Label Noise | Inverse Label Noise | Label Quality Score |

|---|---|---|---|---|---|---|---|

| 0 | 5 | 5 | 2 | 2 | 0.002242 | 0.002242 | 0.997758 |

| 1 | 6 | 6 | 2 | 1 | 0.002088 | 0.001045 | 0.997912 |

| 2 | 8 | 8 | 2 | 0 | 0.002053 | 0.000000 | 0.997947 |

| 3 | 3 | 3 | 2 | 1 | 0.001980 | 0.000991 | 0.998020 |

| 4 | 7 | 7 | 2 | 3 | 0.001946 | 0.002915 | 0.998054 |

| 5 | 2 | 2 | 2 | 3 | 0.001938 | 0.002904 | 0.998062 |

| 6 | 0 | 0 | 1 | 1 | 0.001020 | 0.001020 | 0.998980 |

| 7 | 4 | 4 | 1 | 2 | 0.001018 | 0.002035 | 0.998982 |

| 8 | 9 | 9 | 1 | 2 | 0.000991 | 0.001980 | 0.999009 |

| 9 | 1 | 1 | 0 | 0 | 0.000000 | 0.000000 | 1.000000 |

Class Overlap. In some cases, you may want to merge classes in the top rows (below)

-----------------------------------------------------------------------------------

| | Class Name A | Class Name B | Class Index A | Class Index B | Num Overlapping Examples | Joint Probability |

|---|---|---|---|---|---|---|

| 0 | 2 | 7 | 2 | 7 | 3 | 0.0003 |

| 1 | 5 | 6 | 5 | 6 | 2 | 0.0002 |

| 2 | 4 | 9 | 4 | 9 | 2 | 0.0002 |

| 3 | 3 | 5 | 3 | 5 | 2 | 0.0002 |

| 4 | 2 | 8 | 2 | 8 | 1 | 0.0001 |

| 5 | 4 | 6 | 4 | 6 | 1 | 0.0001 |

| 6 | 3 | 7 | 3 | 7 | 1 | 0.0001 |

| 7 | 0 | 2 | 0 | 2 | 1 | 0.0001 |

| 8 | 8 | 9 | 8 | 9 | 1 | 0.0001 |

| 9 | 0 | 7 | 0 | 7 | 1 | 0.0001 |

| 10 | 1 | 2 | 1 | 2 | 0 | 0.0000 |

| 11 | 3 | 8 | 3 | 8 | 0 | 0.0000 |

| 12 | 4 | 5 | 4 | 5 | 0 | 0.0000 |

| 13 | 0 | 5 | 0 | 5 | 0 | 0.0000 |

| 14 | 4 | 7 | 4 | 7 | 0 | 0.0000 |

| 15 | 4 | 8 | 4 | 8 | 0 | 0.0000 |

| 16 | 0 | 4 | 0 | 4 | 0 | 0.0000 |

| 17 | 0 | 3 | 0 | 3 | 0 | 0.0000 |

| 18 | 5 | 7 | 5 | 7 | 0 | 0.0000 |

| 19 | 5 | 8 | 5 | 8 | 0 | 0.0000 |

| 20 | 5 | 9 | 5 | 9 | 0 | 0.0000 |

| 21 | 6 | 7 | 6 | 7 | 0 | 0.0000 |

| 22 | 6 | 8 | 6 | 8 | 0 | 0.0000 |

| 23 | 6 | 9 | 6 | 9 | 0 | 0.0000 |

| 24 | 7 | 8 | 7 | 8 | 0 | 0.0000 |

| 25 | 7 | 9 | 7 | 9 | 0 | 0.0000 |

| 26 | 3 | 9 | 3 | 9 | 0 | 0.0000 |

| 27 | 0 | 6 | 0 | 6 | 0 | 0.0000 |

| 28 | 1 | 3 | 1 | 3 | 0 | 0.0000 |

| 29 | 2 | 3 | 2 | 3 | 0 | 0.0000 |

| 30 | 1 | 4 | 1 | 4 | 0 | 0.0000 |

| 31 | 1 | 5 | 1 | 5 | 0 | 0.0000 |

| 32 | 1 | 6 | 1 | 6 | 0 | 0.0000 |

| 33 | 1 | 7 | 1 | 7 | 0 | 0.0000 |

| 34 | 1 | 8 | 1 | 8 | 0 | 0.0000 |

| 35 | 1 | 9 | 1 | 9 | 0 | 0.0000 |

| 36 | 2 | 4 | 2 | 4 | 0 | 0.0000 |

| 37 | 3 | 6 | 3 | 6 | 0 | 0.0000 |

| 38 | 2 | 5 | 2 | 5 | 0 | 0.0000 |

| 39 | 2 | 6 | 2 | 6 | 0 | 0.0000 |

| 40 | 0 | 9 | 0 | 9 | 0 | 0.0000 |

| 41 | 0 | 8 | 0 | 8 | 0 | 0.0000 |

| 42 | 2 | 9 | 2 | 9 | 0 | 0.0000 |

| 43 | 3 | 4 | 3 | 4 | 0 | 0.0000 |

| 44 | 0 | 1 | 0 | 1 | 0 | 0.0000 |

* Overall, about 0% (15 of the 10,000) labels in your dataset have potential issues.

** The overall label health score for this dataset is: 1.00.

Generated with <3 from Cleanlab.
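The two summary lines at the end of each report appear to be related in a simple way. In the Caltech256 and MNIST outputs above, the overall label health score is consistent with one minus the fraction of labels flagged as potential issues, rounded to two decimals. The sketch below only re-derives the printed numbers under that assumption.

```python
# (dataset, labels with potential issues, total examples, reported health score)
summaries = [
    ("caltech256", 2051, 29780, 0.93),
    ("mnist_test_set", 15, 10000, 1.00),
]
for name, num_issues, num_examples, reported in summaries:
    issue_fraction = num_issues / num_examples
    score = 1 - issue_fraction
    print(f"{name}: {issue_fraction:.2%} flagged, "
          f"1 - fraction = {score:.4f} (reported {reported:.2f})")
```

For MNIST, the "about 0%" in the report is 15 of 10,000 labels, or 0.15%, so the rounded score of 1.00 does not mean that no issues were found.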

🎯 Cifar10_test_set 🎯

Loaded the 'cifar10_test_set' dataset with predicted probabilities of shape (10000, 10)

------------------------------------------------------------

| Generating a Cleanlab Dataset Health Summary |

| for your dataset with 10,000 examples and 10 classes. |

| Note, Cleanlab is not a medical doctor... yet. |

------------------------------------------------------------

Overall Class Quality and Noise across your dataset (below)

------------------------------------------------------------

| | Class Name | Class Index | Label Issues | Inverse Label Issues | Label Noise | Inverse Label Noise | Label Quality Score |

|---|---|---|---|---|---|---|---|

| 0 | cat | 3 | 71 | 67 | 0.071 | 0.067269 | 0.929 |

| 1 | dog | 5 | 46 | 59 | 0.046 | 0.058243 | 0.954 |

| 2 | bird | 2 | 35 | 32 | 0.035 | 0.032096 | 0.965 |

| 3 | truck | 9 | 31 | 12 | 0.031 | 0.012232 | 0.969 |

| 4 | deer | 4 | 22 | 26 | 0.022 | 0.025896 | 0.978 |

| 5 | frog | 6 | 20 | 13 | 0.020 | 0.013092 | 0.980 |

| 6 | automobile | 1 | 18 | 13 | 0.018 | 0.013065 | 0.982 |

| 7 | airplane | 0 | 16 | 31 | 0.016 | 0.030542 | 0.984 |

| 8 | ship | 8 | 13 | 21 | 0.013 | 0.020833 | 0.987 |

| 9 | horse | 7 | 12 | 10 | 0.012 | 0.010020 | 0.988 |

Class Overlap. In some cases, you may want to merge classes in the top rows (below)

-----------------------------------------------------------------------------------

| | Class Name A | Class Name B | Class Index A | Class Index B | Num Overlapping Examples | Joint Probability |

|---|---|---|---|---|---|---|

| 0 | cat | dog | 3 | 5 | 73 | 0.0073 |

| 1 | automobile | truck | 1 | 9 | 20 | 0.0020 |

| 2 | bird | cat | 2 | 3 | 20 | 0.0020 |

| 3 | airplane | ship | 0 | 8 | 16 | 0.0016 |

| 4 | bird | deer | 2 | 4 | 15 | 0.0015 |

| 5 | deer | dog | 4 | 5 | 14 | 0.0014 |

| 6 | cat | frog | 3 | 6 | 13 | 0.0013 |

| 7 | bird | frog | 2 | 6 | 13 | 0.0013 |

| 8 | cat | deer | 3 | 4 | 12 | 0.0012 |

| 9 | airplane | cat | 0 | 3 | 10 | 0.0010 |

| 10 | airplane | truck | 0 | 9 | 8 | 0.0008 |

| 11 | ship | truck | 8 | 9 | 7 | 0.0007 |

| 12 | bird | dog | 2 | 5 | 7 | 0.0007 |

| 13 | dog | horse | 5 | 7 | 6 | 0.0006 |

| 14 | cat | horse | 3 | 7 | 6 | 0.0006 |

| 15 | airplane | bird | 0 | 2 | 5 | 0.0005 |

| 16 | airplane | automobile | 0 | 1 | 5 | 0.0005 |

| 17 | automobile | ship | 1 | 8 | 4 | 0.0004 |

| 18 | cat | ship | 3 | 8 | 3 | 0.0003 |

| 19 | horse | truck | 7 | 9 | 3 | 0.0003 |

| 20 | deer | horse | 4 | 7 | 3 | 0.0003 |

| 21 | deer | frog | 4 | 6 | 3 | 0.0003 |

| 22 | bird | ship | 2 | 8 | 3 | 0.0003 |

| 23 | bird | horse | 2 | 7 | 3 | 0.0003 |

| 24 | dog | truck | 5 | 9 | 2 | 0.0002 |

| 25 | automobile | frog | 1 | 6 | 1 | 0.0001 |

| 26 | airplane | horse | 0 | 7 | 1 | 0.0001 |

| 27 | airplane | frog | 0 | 6 | 1 | 0.0001 |

| 28 | cat | truck | 3 | 9 | 1 | 0.0001 |

| 29 | airplane | dog | 0 | 5 | 1 | 0.0001 |

| 30 | automobile | dog | 1 | 5 | 1 | 0.0001 |

| 31 | bird | truck | 2 | 9 | 1 | 0.0001 |

| 32 | deer | truck | 4 | 9 | 1 | 0.0001 |

| 33 | dog | frog | 5 | 6 | 1 | 0.0001 |

| 34 | frog | ship | 6 | 8 | 1 | 0.0001 |

| 35 | horse | ship | 7 | 8 | 0 | 0.0000 |

| 36 | frog | truck | 6 | 9 | 0 | 0.0000 |

| 37 | frog | horse | 6 | 7 | 0 | 0.0000 |

| 38 | automobile | deer | 1 | 4 | 0 | 0.0000 |

| 39 | dog | ship | 5 | 8 | 0 | 0.0000 |

| 40 | airplane | deer | 0 | 4 | 0 | 0.0000 |

| 41 | automobile | horse | 1 | 7 | 0 | 0.0000 |

| 42 | automobile | bird | 1 | 2 | 0 | 0.0000 |

| 43 | automobile | cat | 1 | 3 | 0 | 0.0000 |

| 44 | deer | ship | 4 | 8 | 0 | 0.0000 |

* Overall, about 2% (244 of the 10,000) labels in your dataset have potential issues.

** The overall label health score for this dataset is: 0.98.

Generated with <3 from Cleanlab.
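The columns of the class-quality table above are related by simple arithmetic. The snippet below is a minimal sketch, not cleanlab's implementation: it assumes the CIFAR-10 test set holds 1,000 examples per class, and it checks that the printed values are consistent with Label Noise = Label Issues / class size and Label Quality Score = 1 - Label Noise. The helper name `derived_columns` is hypothetical.

```python
# A few rows copied from the CIFAR-10 class-quality table above:
# (class name, label issues, label noise, label quality score)
rows = [
    ("cat", 71, 0.071, 0.929),
    ("dog", 46, 0.046, 0.954),
    ("horse", 12, 0.012, 0.988),
]

# Assumption: the CIFAR-10 test set is balanced with 1,000 examples per class.
EXAMPLES_PER_CLASS = 1000


def derived_columns(label_issues, class_size):
    """Return (label noise, label quality score) implied by an issue count."""
    noise = label_issues / class_size
    return round(noise, 3), round(1 - noise, 3)


for name, issues, printed_noise, printed_quality in rows:
    noise, quality = derived_columns(issues, EXAMPLES_PER_CLASS)
    matches = (noise, quality) == (printed_noise, printed_quality)
    print(f"{name}: noise={noise} quality={quality} matches_table={matches}")
```

Read this way, a low Label Quality Score simply means that a large fraction of the examples given that label were flagged as potential issues.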

🎯 Cifar100_test_set 🎯

Loaded the 'cifar100_test_set' dataset with predicted probabilities of shape (10000, 100)

-------------------------------------------------------------

| Generating a Cleanlab Dataset Health Summary |

| for your dataset with 10,000 examples and 100 classes. |

| Note, Cleanlab is not a medical doctor... yet. |

-------------------------------------------------------------

Overall Class Quality and Noise across your dataset (below)

------------------------------------------------------------

| | Class Name | Class Index | Label Issues | Inverse Label Issues | Label Noise | Inverse Label Noise | Label Quality Score |
|---|---|---|---|---|---|---|---|
| 0 | boy | 11 | 54 | 38 | 0.54 | 0.452381 | 0.46 |
| 1 | girl | 35 | 53 | 40 | 0.53 | 0.459770 | 0.47 |
| 2 | seal | 72 | 49 | 56 | 0.49 | 0.523364 | 0.51 |
| 3 | man | 46 | 45 | 47 | 0.45 | 0.460784 | 0.55 |
| 4 | shark | 73 | 43 | 46 | 0.43 | 0.446602 | 0.57 |
| ... | ... | ... | ... | ... | ... | ... | ... |
| 95 | road | 68 | 5 | 11 | 0.05 | 0.103774 | 0.95 |
| 96 | skunk | 75 | 5 | 3 | 0.05 | 0.030612 | 0.95 |
| 97 | orange | 53 | 3 | 12 | 0.03 | 0.110092 | 0.97 |
| 98 | motorcycle | 48 | 3 | 5 | 0.03 | 0.049020 | 0.97 |
| 99 | wardrobe | 94 | 3 | 5 | 0.03 | 0.049020 | 0.97 |

100 rows × 7 columns

Class Overlap. In some cases, you may want to merge classes in the top rows (below)

-----------------------------------------------------------------------------------

| | Class Name A | Class Name B | Class Index A | Class Index B | Num Overlapping Examples | Joint Probability |
|---|---|---|---|---|---|---|
| 0 | girl | woman | 35 | 98 | 34 | 0.0034 |
| 1 | boy | man | 11 | 46 | 32 | 0.0032 |
| 2 | maple_tree | willow_tree | 47 | 96 | 26 | 0.0026 |
| 3 | maple_tree | oak_tree | 47 | 52 | 25 | 0.0025 |
| 4 | otter | seal | 55 | 72 | 25 | 0.0025 |
| ... | ... | ... | ... | ... | ... | ... |
| 4945 | cattle | whale | 19 | 95 | 0 | 0.0000 |
| 4946 | cattle | willow_tree | 19 | 96 | 0 | 0.0000 |
| 4947 | cattle | woman | 19 | 98 | 0 | 0.0000 |
| 4948 | cattle | worm | 19 | 99 | 0 | 0.0000 |
| 4949 | woman | worm | 98 | 99 | 0 | 0.0000 |

4950 rows × 6 columns

* Overall, about 18% (1,846 of the 10,000) labels in your dataset have potential issues.

** The overall label health score for this dataset is: 0.82.

Generated with <3 from Cleanlab.
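When the top rows of the overlap table point to classes worth merging (for example `maple_tree` and `willow_tree` above), the labels and predicted probabilities need to be remapped consistently. The following is a minimal sketch in plain Python under stated assumptions: `merge_classes` is a hypothetical helper (not part of cleanlab), `labels` is a list of integer class indices, and `pred_probs` is a list of per-example probability lists. It folds one class into another by summing their probability columns and reindexing the remaining classes.

```python
def merge_classes(labels, pred_probs, keep, drop):
    """Fold class `drop` into class `keep`; return remapped labels and pred_probs.

    Hypothetical helper for illustration only.
    """
    if keep == drop:
        raise ValueError("keep and drop must be different classes")
    num_classes = len(pred_probs[0])

    # Build old-index -> new-index map, skipping the dropped class.
    new_index = {}
    next_idx = 0
    for k in range(num_classes):
        if k == drop:
            continue
        new_index[k] = next_idx
        next_idx += 1
    # The dropped class now shares the index of the class we keep.
    new_index[drop] = new_index[keep]

    merged_labels = [new_index[y] for y in labels]
    merged_probs = []
    for row in pred_probs:
        new_row = [0.0] * (num_classes - 1)
        for k, p in enumerate(row):
            new_row[new_index[k]] += p  # merged columns are summed
        merged_probs.append(new_row)
    return merged_labels, merged_probs


# Toy data with 3 classes; merge class 2 into class 0.
labels = [0, 2, 1, 2]
pred_probs = [
    [0.7, 0.1, 0.2],
    [0.3, 0.1, 0.6],
    [0.1, 0.8, 0.1],
    [0.4, 0.2, 0.4],
]
merged_labels, merged_probs = merge_classes(labels, pred_probs, keep=0, drop=2)
print(merged_labels)  # [0, 0, 1, 0]
```

Summing probability columns is only an approximation of what a model trained on the merged classes would predict; retraining on the merged labels to obtain fresh out-of-sample `pred_probs` is likely the more reliable option before re-running the health summary.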

🎯 20news_test_set 🎯

Loaded the '20news_test_set' dataset with predicted probabilities of shape (7532, 20)

-----------------------------------------------------------

| Generating a Cleanlab Dataset Health Summary |

| for your dataset with 7,532 examples and 20 classes. |

| Note, Cleanlab is not a medical doctor... yet. |

-----------------------------------------------------------

Overall Class Quality and Noise across your dataset (below)

------------------------------------------------------------

| | Class Name | Class Index | Label Issues | Inverse Label Issues | Label Noise | Inverse Label Noise | Label Quality Score |
|---|---|---|---|---|---|---|---|
| 0 | alt.atheism | 0 | 11 | 3 | 0.034483 | 0.009646 | 0.965517 |
| 1 | comp.os.ms-windows.misc | 2 | 12 | 8 | 0.030457 | 0.020513 | 0.969543 |
| 2 | comp.sys.ibm.pc.hardware | 3 | 11 | 14 | 0.028061 | 0.035443 | 0.971939 |
| 3 | comp.windows.x | 5 | 10 | 2 | 0.025316 | 0.005168 | 0.974684 |
| 4 | misc.forsale | 6 | 8 | 20 | 0.020513 | 0.049751 | 0.979487 |
| 5 | talk.religion.misc | 19 | 5 | 11 | 0.019920 | 0.042802 | 0.980080 |
| 6 | rec.autos | 7 | 7 | 2 | 0.017677 | 0.005115 | 0.982323 |
| 7 | comp.sys.mac.hardware | 4 | 5 | 2 | 0.012987 | 0.005236 | 0.987013 |
| 8 | sci.electronics | 12 | 5 | 10 | 0.012723 | 0.025126 | 0.987277 |
| 9 | talk.politics.guns | 16 | 4 | 3 | 0.010989 | 0.008264 | 0.989011 |
| 10 | comp.graphics | 1 | 4 | 11 | 0.010283 | 0.027778 | 0.989717 |
| 11 | talk.politics.misc | 18 | 3 | 0 | 0.009677 | 0.000000 | 0.990323 |
| 12 | sci.space | 14 | 3 | 4 | 0.007614 | 0.010127 | 0.992386 |
| 13 | sci.crypt | 11 | 2 | 2 | 0.005051 | 0.005051 | 0.994949 |
| 14 | sci.med | 13 | 2 | 2 | 0.005051 | 0.005051 | 0.994949 |
| 15 | rec.motorcycles | 8 | 2 | 0 | 0.005025 | 0.000000 | 0.994975 |
| 16 | rec.sport.hockey | 10 | 2 | 0 | 0.005013 | 0.000000 | 0.994987 |
| 17 | soc.religion.christian | 15 | 0 | 0 | 0.000000 | 0.000000 | 1.000000 |
| 18 | talk.politics.mideast | 17 | 0 | 0 | 0.000000 | 0.000000 | 1.000000 |
| 19 | rec.sport.baseball | 9 | 0 | 2 | 0.000000 | 0.005013 | 1.000000 |

Class Overlap. In some cases, you may want to merge classes in the top rows (below)

-----------------------------------------------------------------------------------

| | Class Name A | Class Name B | Class Index A | Class Index B | Num Overlapping Examples | Joint Probability |
|---|---|---|---|---|---|---|
| 0 | alt.atheism | talk.religion.misc | 0 | 19 | 14 | 0.001859 |
| 1 | comp.os.ms-windows.misc | comp.sys.ibm.pc.hardware | 2 | 3 | 10 | 0.001328 |
| 2 | misc.forsale | sci.electronics | 6 | 12 | 7 | 0.000929 |
| 3 | misc.forsale | rec.autos | 6 | 7 | 7 | 0.000929 |
| 4 | comp.os.ms-windows.misc | comp.windows.x | 2 | 5 | 5 | 0.000664 |
| ... | ... | ... | ... | ... | ... | ... |
| 185 | comp.sys.mac.hardware | rec.motorcycles | 4 | 8 | 0 | 0.000000 |
| 186 | comp.sys.mac.hardware | rec.sport.baseball | 4 | 9 | 0 | 0.000000 |
| 187 | comp.sys.mac.hardware | rec.sport.hockey | 4 | 10 | 0 | 0.000000 |
| 188 | comp.sys.mac.hardware | sci.crypt | 4 | 11 | 0 | 0.000000 |
| 189 | talk.politics.misc | talk.religion.misc | 18 | 19 | 0 | 0.000000 |

190 rows × 6 columns

* Overall, about 1% (55 of the 7,532) labels in your dataset have potential issues.

** The overall label health score for this dataset is: 0.99.

Generated with <3 from Cleanlab.
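The summary numbers printed for the three datasets above can be sanity-checked with a few lines of arithmetic. The sketch below is not cleanlab's implementation and all function names in it are hypothetical. It shows that the printed values are consistent with three relationships: the overlap table has one row per unordered pair of classes, Joint Probability equals Num Overlapping Examples divided by the dataset size, and the overall health score is roughly one minus the fraction of labels flagged as potential issues.

```python
from itertools import combinations


def num_class_pairs(num_classes):
    """Count unordered pairs of distinct classes, i.e. K * (K - 1) / 2."""
    return sum(1 for _ in combinations(range(num_classes), 2))


def joint_probability(num_overlapping, num_examples):
    """Fraction of the whole dataset that falls in one overlapping pair."""
    return num_overlapping / num_examples


def health_from_issue_count(num_issues, num_examples):
    """Health score implied by the number of labels with potential issues."""
    return 1 - num_issues / num_examples


# Rows in each overlap table: 10, 100, and 20 classes.
print([num_class_pairs(k) for k in (10, 100, 20)])  # [45, 4950, 190]

# Top row of the 20news overlap table: 14 overlapping examples out of 7,532.
print(round(joint_probability(14, 7532), 6))  # 0.001859

# Issue counts printed for CIFAR-10, CIFAR-100, and 20news.
for issues, n in [(244, 10_000), (1_846, 10_000), (55, 7_532)]:
    print(f"{health_from_issue_count(issues, n):.2f}")  # 0.98, 0.82, 0.99
```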

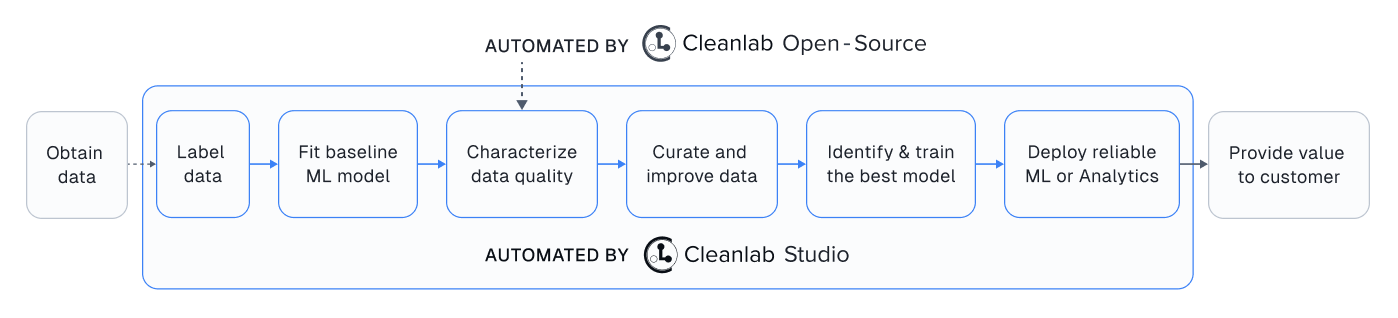

Spending too much time on data quality?#

Using this open-source package effectively can require significant ML expertise and experimentation, and handling the detected data issues can be cumbersome.

That’s why we built Cleanlab Studio – an automated platform to find and fix issues in your dataset, 100x faster and more accurately. Cleanlab Studio automatically runs optimized data quality algorithms from this package on top of cutting-edge AutoML & Foundation models fit to your data, and helps you fix detected issues via a smart data correction interface. Try it for free!