Classification with Structured/Tabular Data and Noisy Labels

Consider Using Datalab

If you are interested in detecting a wide variety of issues in your tabular data, check out the Datalab tabular tutorial. Datalab can detect many other types of data issues beyond label issues, whereas CleanLearning is a convenience method for handling noisy labels with sklearn-compatible classification models.

In this 5-minute quickstart tutorial, we use cleanlab with scikit-learn models to find potential label errors in a classification dataset with tabular features (numeric/categorical columns). Tabular (or structured) data are typically organized in a row/column format and stored in a SQL database or in file types such as CSV, Excel, or Parquet. Here we consider a Student Grades dataset, which contains records for over 900 students, each with three exam grades, some optional notes, and an assigned letter grade (their class label). cleanlab automatically identifies over 200 examples in this dataset that were mislabeled with an incorrect final grade (data entry mistakes).

This tutorial shows how to handle noisy labels and produce more robust classification models for your own tabular datasets. cleanlab’s CleanLearning class automatically detects and filters out such badly labeled data in order to train a more robust version of any machine learning model. No change to your existing modeling code is required!

Overview of what we’ll do in this tutorial:

- Train a classifier model (here scikit-learn’s ExtraTreesClassifier, although any model could be used) and use this classifier to compute (out-of-sample) predicted class probabilities via cross-validation.
- Identify potential label errors in the data with cleanlab’s find_label_issues method.
- Train a robust version of the same ExtraTrees model via cleanlab’s CleanLearning wrapper.

Quickstart

Already have an sklearn-compatible model, tabular data, and given labels? Run the code below to train your model and get label issues.

from cleanlab.classification import CleanLearning
cl = CleanLearning(model)
_ = cl.fit(train_data, labels)
label_issues = cl.get_label_issues()
# predictions from a version of your model trained on auto-cleaned data
preds = cl.predict(test_data)

Is your model/data not compatible with CleanLearning? You can instead run cross-validation on your model to get out-of-sample pred_probs. Then run the code below to get label issue indices ranked by their inferred severity.

from cleanlab.filter import find_label_issues
ranked_label_issues = find_label_issues(
    labels,
    pred_probs,
    return_indices_ranked_by="self_confidence",
)

1. Install required dependencies

You can use pip to install all packages required for this tutorial as follows:

!pip install cleanlab
# Make sure to install the version corresponding to this tutorial
# E.g. if viewing master branch documentation:
# !pip install git+https://github.com/cleanlab/cleanlab.git


import random
import numpy as np
import pandas as pd
from sklearn.preprocessing import StandardScaler, LabelEncoder
from sklearn.model_selection import cross_val_predict, train_test_split
from sklearn.metrics import accuracy_score
from sklearn.ensemble import ExtraTreesClassifier
from cleanlab.filter import find_label_issues
from cleanlab.classification import CleanLearning

SEED = 100
np.random.seed(SEED)
random.seed(SEED)

2. Load and process the data

We first load the data features and labels (which are possibly noisy).


grades_data = pd.read_csv("https://s.cleanlab.ai/grades-tabular-demo-v2.csv")
grades_data.head()


| | stud_ID | exam_1 | exam_2 | exam_3 | notes | letter_grade |
|---|---|---|---|---|---|---|
| 0 | f48f73 | 53.00 | 77.00 | 9.00 | 3 | C |
| 1 | 0bd4e7 | 81.00 | 64.00 | 80.00 | great participation +10 | B |
| 2 | 0bd4e7 | 81.00 | 64.00 | 80.00 | great participation +10 | B |
| 3 | cb9d7a | 0.61 | 0.94 | 0.78 | NaN | C |
| 4 | 9acca4 | 48.00 | 90.00 | 9.00 | 1 | C |


X_raw = grades_data[["exam_1", "exam_2", "exam_3", "notes"]]
labels_raw = grades_data["letter_grade"]

Next we preprocess the data. Here we apply one-hot encoding to features with categorical data and standardize features with numeric data. We also perform label encoding on the labels, as cleanlab’s functions require the label for each example to be an integer in 0, 1, …, num_classes - 1.


categorical_features = ["notes"]
X_encoded = pd.get_dummies(X_raw, columns=categorical_features, drop_first=True)

numeric_features = ["exam_1", "exam_2", "exam_3"]
scaler = StandardScaler()
X_processed = X_encoded.copy()
X_processed[numeric_features] = scaler.fit_transform(X_encoded[numeric_features])

encoder = LabelEncoder()
encoder.fit(labels_raw)
labels = encoder.transform(labels_raw)
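To see what these two encodings produce, here is a small sketch on a few hypothetical toy rows (the values, X_toy and grades are made up for illustration). It uses np.unique(..., return_inverse=True) as a stand-in for LabelEncoder, since both map class names to integers in sorted order.

```python
import numpy as np
import pandas as pd

# Hypothetical toy rows standing in for X_raw and labels_raw.
X_toy = pd.DataFrame({
    "exam_1": [53.0, 81.0, 48.0],
    "notes": ["missed class", "great participation +10", np.nan],
})
grades = np.array(["C", "B", "C"])

# One-hot encode "notes": drop_first=True omits the column for the first
# (sorted) category, and NaN rows get all zeros by default.
X_enc = pd.get_dummies(X_toy, columns=["notes"], drop_first=True)

# Integer-encode the labels: np.unique returns the sorted class names,
# the same ordering LabelEncoder stores in its classes_ attribute.
classes, labels_toy = np.unique(grades, return_inverse=True)

print(list(X_enc.columns))  # ['exam_1', 'notes_missed class']
print(classes, labels_toy)  # ['B' 'C'] [1 0 1]
```

One side effect worth knowing: with drop_first=True, a row with missing notes and a row with the dropped category both encode as all zeros, so the model cannot tell them apart. Whether that matters depends on your data.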

Bringing Your Own Data (BYOD)?

You can easily replace the above with your own tabular dataset, and continue with the rest of the tutorial.

Your classes (and the entries of labels) should be represented as integer indices 0, 1, …, num_classes - 1. For example, for a dataset with 7 examples from 3 classes, labels might look like: np.array([2,0,0,1,2,0,1])

3. Select a classification model and compute out-of-sample predicted probabilities

Here we use a simple ExtraTrees classifier that fits various randomized decision trees on our data, but you can choose any suitable scikit-learn model for this tutorial.


clf = ExtraTreesClassifier()

To find potential labeling errors, cleanlab requires a probabilistic prediction from your model for every datapoint. However, these predictions tend to be overfitted (and thus unreliable) for examples the model was trained on. For the best results, cleanlab should be applied with out-of-sample predicted class probabilities, i.e., predictions on examples held out from the model during training.

K-fold cross-validation is a straightforward way to produce out-of-sample predicted probabilities for every datapoint in the dataset: we train K copies of our model on different data subsets and use each copy to predict on the subset of data it did not see during training. An additional benefit of cross-validation is that it provides a more reliable evaluation of our model than a single training/validation split. We can implement this via the cross_val_predict function from scikit-learn:


num_crossval_folds = 5
pred_probs = cross_val_predict(
    clf,
    X_processed,
    labels,
    cv=num_crossval_folds,
    method="predict_proba",
)
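Why out-of-sample predictions matter can be seen with a toy 1-nearest-neighbour classifier (a hypothetical stand-in for ExtraTrees, chosen because its overfitting is easy to reason about). In-sample, every point is its own nearest neighbour, so the model echoes back every given label, including an injected error. Leave-one-out prediction (K-fold with K equal to the number of examples) exposes the error.

```python
import numpy as np

def one_nn_predict(X_train, y_train, X_query):
    """Predict each query point's label from its single nearest training point."""
    d2 = ((X_query[:, None, :] - X_train[None, :, :]) ** 2).sum(axis=2)
    return y_train[d2.argmin(axis=1)]

rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0, 1, (20, 2)), rng.normal(8, 1, (20, 2))])
y = np.array([0] * 20 + [1] * 20)
y[3] = 1  # inject one label error into the first cluster

# In-sample: each point sits at distance 0 from itself, so its given label
# (right or wrong) is simply reproduced.
in_sample = one_nn_predict(X, y, X)

# Out-of-sample (leave-one-out): each point is predicted by a model that
# never saw it during training.
loo = np.array([
    one_nn_predict(np.delete(X, i, axis=0), np.delete(y, i), X[i:i + 1])[0]
    for i in range(len(y))
])

print(np.flatnonzero(in_sample != y))  # []
print(np.flatnonzero(loo != y))        # contains index 3
```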

4. Use cleanlab to find label issues

Based on the given labels and out-of-sample predicted probabilities, cleanlab can quickly help us identify poorly labeled instances in our data table. For a dataset with N examples from K classes, the labels should be a 1D array of length N and predicted probabilities should be a 2D (N x K) array. Here we request that the indices of the identified label issues be sorted by cleanlab’s self-confidence score, which measures the quality of each given label via the probability assigned to it in our model’s prediction.


ranked_label_issues = find_label_issues(
    labels=labels, pred_probs=pred_probs, return_indices_ranked_by="self_confidence"
)
print(f"Cleanlab found {len(ranked_label_issues)} potential label errors.")

Cleanlab found 212 potential label errors.
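The idea behind flagging and ranking can be sketched in a few lines of NumPy. This is a simplified version of confident learning written for illustration, not cleanlab's implementation (find_label_issues has more machinery, controlled by parameters such as filter_by, so its results will differ). The function name sketch_label_issues is hypothetical. Each class j gets a threshold equal to the average self-confidence of examples labeled j; an example is flagged when it confidently belongs to a different class than its given label, and flagged examples are sorted by self-confidence, most suspicious first.

```python
import numpy as np

def sketch_label_issues(labels, pred_probs):
    """Simplified confident-learning sketch: flag, then rank by self-confidence."""
    n, k = pred_probs.shape  # pred_probs is N x K
    if labels.shape != (n,):
        raise ValueError("labels must be a 1D array of length N")
    # Self-confidence: probability the model assigns to each example's given label.
    self_conf = pred_probs[np.arange(n), labels]
    # Per-class threshold: mean self-confidence of examples given that label.
    thresholds = np.array([self_conf[labels == j].mean() for j in range(k)])
    # Among classes whose probability clears its threshold, take the most likely.
    above = pred_probs >= thresholds
    confident_class = np.where(above, pred_probs, -np.inf).argmax(axis=1)
    # Flag examples that confidently belong to a class other than their label.
    is_issue = above.any(axis=1) & (confident_class != labels)
    issue_idx = np.flatnonzero(is_issue)
    # Lowest self-confidence first, i.e. the most likely label errors on top.
    return issue_idx[np.argsort(self_conf[issue_idx])]

toy_labels = np.array([0, 0, 0, 1, 1, 1])
toy_pred_probs = np.array([[0.9, 0.1], [0.8, 0.2], [0.2, 0.8],
                           [0.1, 0.9], [0.3, 0.7], [0.95, 0.05]])
print(sketch_label_issues(toy_labels, toy_pred_probs))  # [5 2]
```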

Let’s review some of the most likely label errors:


X_raw.iloc[ranked_label_issues].assign(label=labels_raw.iloc[ranked_label_issues]).head()


| | exam_1 | exam_2 | exam_3 | notes | label |
|---|---|---|---|---|---|
| 456 | 58.0 | 92.0 | 93.0 | NaN | D |
| 827 | 99.0 | 86.0 | 74.0 | NaN | D |
| 637 | 0.0 | 79.0 | 65.0 | cheated on exam, gets 0pts | A |
| 120 | 0.0 | 81.0 | 97.0 | cheated on exam, gets 0pts | B |
| 233 | 68.0 | 83.0 | 76.0 | NaN | F |

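These final grades look suspicious and should be carefully re-examined. For instance, row 827 scored 99, 86, and 74 on the three exams yet received a D, while row 637 received an A despite a 0 on exam 1 for cheating. Displaying the flagged rows alongside their given labels like this is a straightforward way to review the rows in a data table that might be mislabeled.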
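When reviewing flagged rows, it can also help to show the class the model considers most likely next to the given label. The sketch below uses hypothetical toy stand-ins for X_raw, labels, pred_probs, ranked_label_issues and encoder.classes_; the extra column names are made up for illustration. In the tutorial itself, encoder.inverse_transform(pred_probs.argmax(axis=1)) would give the same suggested class names. Treat the suggestion as a hint for the reviewer, not as ground truth.

```python
import numpy as np
import pandas as pd

# Hypothetical toy stand-ins for the tutorial's variables.
classes = np.array(["A", "B", "C"])  # plays the role of encoder.classes_
X_toy = pd.DataFrame({"exam_1": [95.0, 40.0, 88.0], "exam_2": [91.0, 45.0, 70.0]})
labels_toy = np.array([2, 2, 1])
pred_probs_toy = np.array([[0.80, 0.15, 0.05],
                           [0.05, 0.15, 0.80],
                           [0.10, 0.70, 0.20]])
ranked_toy = np.array([0])  # indices of flagged rows, most suspicious first

review = X_toy.iloc[ranked_toy].assign(
    given_label=classes[labels_toy[ranked_toy]],
    suggested_label=classes[pred_probs_toy[ranked_toy].argmax(axis=1)],
    self_confidence=pred_probs_toy[ranked_toy, labels_toy[ranked_toy]],
)
print(review)
```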

5. Train a more robust model from noisy labels

Following proper ML practice, let’s split our data into train and test sets.


X_train, X_test, labels_train, labels_test = train_test_split(
    X_encoded,
    labels,
    test_size=0.2,
    random_state=SEED,
)

We again standardize the numeric features, this time fitting the scaling parameters solely on the training set.


scaler = StandardScaler()
X_train[numeric_features] = scaler.fit_transform(X_train[numeric_features])
X_test[numeric_features] = scaler.transform(X_test[numeric_features])
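The point of fitting the scaler on the training set only is that the test set never influences the transformation, which avoids leaking test information into training. A minimal NumPy sketch of the same fit/transform split (the function name standardize_train_test is hypothetical) looks like this:

```python
import numpy as np

def standardize_train_test(X_train, X_test):
    """Scale both splits using statistics computed on the training split only."""
    mu = X_train.mean(axis=0)
    sigma = X_train.std(axis=0)  # population std (ddof=0), as StandardScaler uses
    # Leave constant columns unscaled instead of dividing by zero.
    sigma = np.where(sigma == 0, 1.0, sigma)
    return (X_train - mu) / sigma, (X_test - mu) / sigma

X_tr = np.array([[1.0, 10.0], [3.0, 10.0]])
X_te = np.array([[5.0, 12.0]])
tr_scaled, te_scaled = standardize_train_test(X_tr, X_te)
print(te_scaled)  # [[3. 2.]]
```

Note that the test row can land far outside the range seen in training (here 3 standard deviations), which is expected: the test data are transformed, never used to fit.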

Let’s now train and evaluate the original ExtraTrees model.


clf.fit(X_train, labels_train)
acc_og = clf.score(X_test, labels_test)
print(f"Test accuracy of original model: {acc_og}")

Test accuracy of original model: 0.783068783068783

cleanlab provides a wrapper class that can be easily applied to any scikit-learn compatible model. Once wrapped, the resulting model can still be used in the exact same manner, but it will now train more robustly if the data have noisy labels.


clf = ExtraTreesClassifier()  # Note we first re-initialize clf
cl = CleanLearning(clf)  # cl has same methods/attributes as clf

The following operations take place when we train the cleanlab-wrapped model: The original model is trained in a cross-validated fashion to produce out-of-sample predicted probabilities. Then, these predicted probabilities are used to identify label issues, which are then removed from the dataset. Finally, the original model is trained on the remaining clean subset of the data once more.


_ = cl.fit(X_train, labels_train)
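The three steps that happen inside cl.fit can be sketched end to end in NumPy. This is an illustration under stated assumptions, not cleanlab's code: a toy nearest-centroid classifier stands in for your model, and step 2 uses a deliberately simple rule (the out-of-sample predicted class disagrees with the given label) where CleanLearning relies on cleanlab's own label-issue detection. All function names here are hypothetical.

```python
import numpy as np

def fit_centroids(X, y, num_classes):
    """'Train' a nearest-centroid classifier: one mean vector per class."""
    return np.stack([X[y == k].mean(axis=0) for k in range(num_classes)])

def predict_proba(centroids, X):
    """Softmax over negative squared distances to each class centroid."""
    logits = -((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    p = np.exp(logits)
    return p / p.sum(axis=1, keepdims=True)

def clean_then_fit(X, y, num_classes, n_folds=5, seed=0):
    n = len(y)
    # Step 1: out-of-sample predicted probabilities via K-fold cross-validation.
    pred_probs = np.zeros((n, num_classes))
    folds = np.array_split(np.random.default_rng(seed).permutation(n), n_folds)
    for held_out in folds:
        train = np.setdiff1d(np.arange(n), held_out)
        model = fit_centroids(X[train], y[train], num_classes)
        pred_probs[held_out] = predict_proba(model, X[held_out])
    # Step 2: flag label issues (simple stand-in rule, see lead-in).
    is_issue = pred_probs.argmax(axis=1) != y
    # Step 3: retrain the same kind of model on the unflagged examples only.
    keep = ~is_issue
    return fit_centroids(X[keep], y[keep], num_classes), np.flatnonzero(is_issue)

rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0, 1, (30, 2)), rng.normal(10, 1, (30, 2))])
y = np.array([0] * 30 + [1] * 30)
y[[0, 30]] = [1, 0]  # inject two label errors
centroids, issues = clean_then_fit(X, y, num_classes=2)
print(issues)  # [ 0 30]
```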

We can get predictions from the resulting model and evaluate them, just as we did for the original scikit-learn model.


preds = cl.predict(X_test)
acc_cl = accuracy_score(labels_test, preds)
print(f"Test accuracy of cleanlab-trained model: {acc_cl}")

Test accuracy of cleanlab-trained model: 0.8095238095238095

We can see that the test set accuracy improved (from about 0.783 to 0.810) as a result of the data cleaning. Note that this will not always be the case, especially when we evaluate on test data that are themselves noisy. The best practice is to run cleanlab to identify potential label issues and then manually review them before blindly trusting any accuracy metrics. In particular, the most effort should be made to ensure high-quality test data, since the test set is supposed to reflect the expected performance of our model during deployment.
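A quick simulation shows how noisy test labels distort accuracy. Under the assumption of a hypothetical model that is always right, corrupting 10% of the test labels caps its measured accuracy at 90%; more generally, the measured number mixes model quality with label quality.

```python
import numpy as np

rng = np.random.default_rng(0)
n, num_classes = 1000, 5
true_labels = rng.integers(0, num_classes, size=n)
preds = true_labels.copy()  # a hypothetical model that is always right

# Corrupt 10% of the test labels, always to a *different* class.
noisy_idx = rng.choice(n, size=n // 10, replace=False)
shift = rng.integers(1, num_classes, size=noisy_idx.size)  # 1..4, never 0
test_labels = true_labels.copy()
test_labels[noisy_idx] = (true_labels[noisy_idx] + shift) % num_classes

acc_vs_true = np.mean(preds == true_labels)
acc_vs_noisy = np.mean(preds == test_labels)
print(acc_vs_true, acc_vs_noisy)  # 1.0 0.9
```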

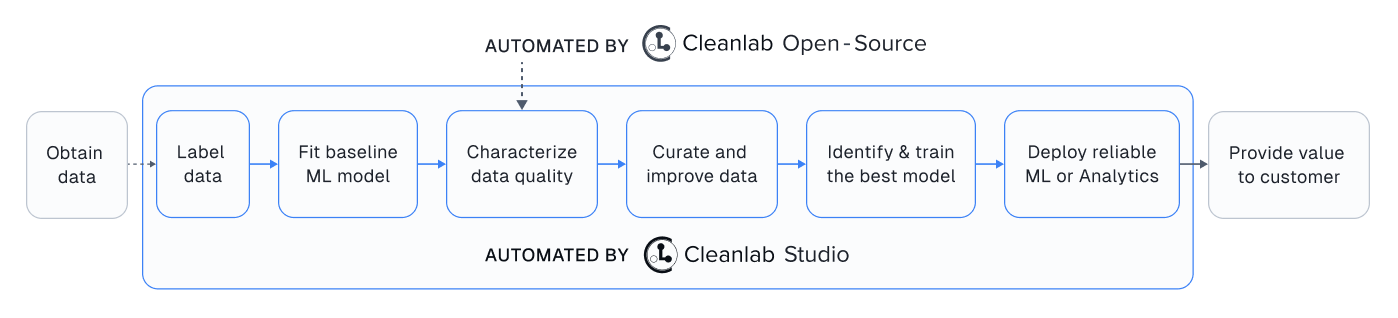

Spending too much time on data quality?

Using this open-source package effectively can require significant ML expertise and experimentation, plus handling detected data issues can be cumbersome.

That’s why we built Cleanlab Studio – an automated platform to find and fix issues in your dataset, 100x faster and more accurately. Cleanlab Studio automatically runs optimized data quality algorithms from this package on top of cutting-edge AutoML & Foundation models fit to your data, and helps you fix detected issues via a smart data correction interface. Try it for free!